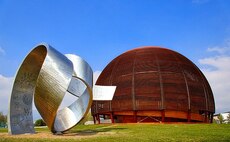

Large Hadron Collider faces large storage problems as the pace of technology can't keep up

Increasing storage costs and slowing innovation have spurred Cern to rethink its data storage model, as its Large Hadron Collider (LHC) is expected to create 400 petabytes (PB) of scientific data pe...
